Bro Absolutely COOKED With This.

More Posts from Kyn-elwynn and Others

New York

it is weird that celiac stuff has become part of the 'culture war'. because it's literally just a medical thing.... I get super anemic unless I cut a certain protein out of my diet, because it bulldozes the villi in my intestines. but if I post about it, right-wingers send me gore images. I guess you can't expect shitty people to be logical, but I've even heard lefty people make fun of gluten stuff, and it's like why are you mad about this??? why are you pissed off that I'm eating bread that doesn't taste as good so that I can have blood in my body? it's so morally neutral.

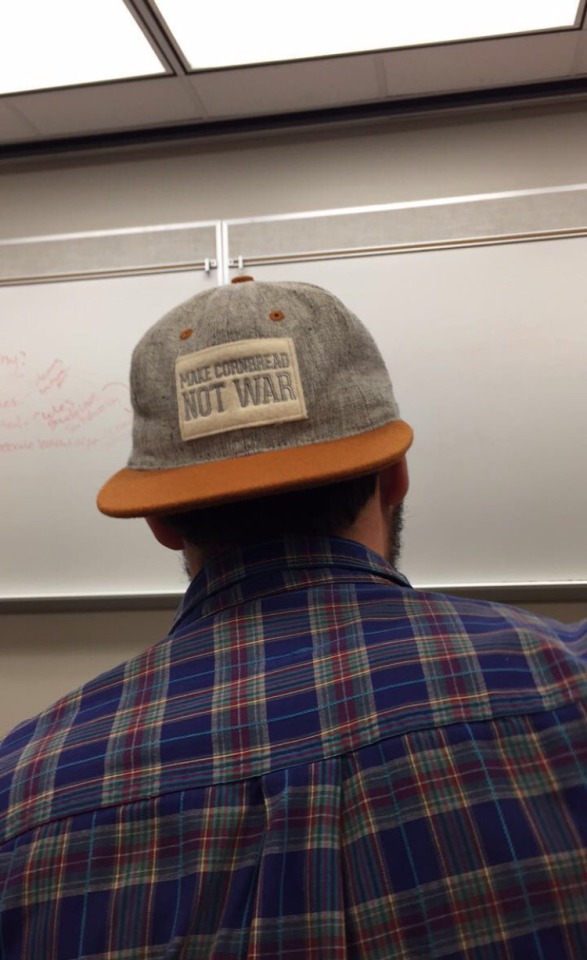

There’s this guy that sits in front of me who you would think is a conservative redneck bc his entire aesthetic is southern lumberjack w boots and denim and hats but he’s actually one of the most inclusive and anti trump guys I’ve ever met and today he wore this hat that sums up his entire personality and I’m screaming.

Don’t judge a book by its cover; make cornbread, not war.

"How can I be a witch/pagan without falling for conspiracy theories/New Age cult stuff?" starter kit

Posts & Articles

Check your conspiracy theory. Does any of it sound like this?

Check your conspiracy theory part two: double, double, boil and trouble.

QAnon is an old form of anti-Semitism in a new package, experts say

Some antisemitic dogwhistles to watch out for

Eugenicist and bioessentialist beliefs about magic

New Age beliefs that derive from racist pseudoscience

The New Age concept of ascension - what is it?

A quick intro to starseeds

Starseeds: Nazis in Space?

Reminder that the lizard alien conspiracy theory is antisemitism

The Ancient Astronaut Hypothesis is Racist and Harmful

The Truth About Atlantis

Why the Nazis were obsessed with finding the lost city of Atlantis

The Nazis' love affair with the occult

Occultism in Nazism

Red flag names in cult survivor resources/groups (all of them are far right conspiracy theorists/grifters)

The legacy of implanted Satanic abuse ‘memories’ is still causing damage today

Why Satanic Panic never really ended

Dangerous Therapy: The Story of Patricia Burgus and Multiple Personality Disorder

Remember a Previous Life? Maybe You Have a Bad Memory

A Case of Reincarnation - Reexamined

Crash and Burn: James Leininger Story Debunked

Debunking Myths About Easter/Ostara

Just How Pagan is Christmas, Really?

The Origins of the Christmas Tree

No, Santa Claus Is Not Inspired By Odin

Why Did The Patriarchal Greeks And Romans Worship Such Powerful Goddesses?

No, Athena Didn't Turn Medusa Into A Monster To Protect Her

Who Was the First God?

Were Ancient Civilizations Conservative Or Liberal?

How Misogyny, Homophobia, and Antisemitism Influence Transphobia

Podcasts & Videos

BS-Free Witchcraft

Angela's Symposium

ESOTERICA

ReligionForBreakfast

Weird Reads With Emily Louise

It's Probably (not!) Aliens

Conspirituality

Miniminuteman

Behind The Bastards

Why I don’t like AI art

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in CHICAGO with PETER SAGAL on Apr 2, and in BLOOMINGTON at MORGENSTERN BOOKS on Apr 4. More tour dates here.

A law professor friend tells me that LLMs have completely transformed the way she relates to grad students and post-docs – for the worse. And no, it's not that they're cheating on their homework or using LLMs to write briefs full of hallucinated cases.

The thing that LLMs have changed in my friend's law school is letters of reference. Historically, students would only ask a prof for a letter of reference if they knew the prof really rated them. Writing a good reference is a ton of work, and that's rather the point: the mere fact that a law prof was willing to write one for you represents a signal about how highly they value you. It's a form of proof of work.

But then came the chatbots and with them, the knowledge that a reference letter could be generated by feeding three bullet points to a chatbot and having it generate five paragraphs of florid nonsense based on those three short sentences. Suddenly, profs were expected to write letters for many, many students – not just the top performers.

Of course, this was also happening at other universities, meaning that when my friend's school opened up for postdocs, they were inundated with letters of reference from profs elsewhere. Naturally, they handled this flood by feeding each letter back into an LLM and asking it to boil it down to three bullet points. No one thinks that these are identical to the three bullet points that were used to generate the letters, but it's close enough, right?

Obviously, this is terrible. At this point, letters of reference might as well consist solely of three bullet-points on letterhead. After all, the entire communicative intent in a chatbot-generated letter is just those three bullets. Everything else is padding, and all it does is dilute the communicative intent of the work. No matter how grammatically correct or even stylistically interesting the AI generated sentences are, they have less communicative freight than the three original bullet points. After all, the AI doesn't know anything about the grad student, so anything it adds to those three bullet points is, by definition, irrelevant to the question of whether they're well suited for a postdoc.

Which brings me to art. As a working artist in his third decade of professional life, I've concluded that the point of art is to take a big, numinous, irreducible feeling that fills the artist's mind, and attempt to infuse that feeling into some artistic vessel – a book, a painting, a song, a dance, a sculpture, etc – in the hopes that this work will cause a loose facsimile of that numinous, irreducible feeling to manifest in someone else's mind.

Art, in other words, is an act of communication – and there you have the problem with AI art. As a writer, when I write a novel, I make tens – if not hundreds – of thousands of tiny decisions that are in service to this business of causing my big, irreducible, numinous feeling to materialize in your mind. Most of those decisions aren't even conscious, but they are definitely decisions, and I don't make them solely on the basis of probabilistic autocomplete. One of my novels may be good and it may be bad, but one thing it definitely is is rich in communicative intent. Every one of those microdecisions is an expression of artistic intent.

Now, I'm not much of a visual artist. I can't draw, though I really enjoy creating collages, which you can see here:

https://www.flickr.com/photos/doctorow/albums/72177720316719208

I can tell you that every time I move a layer, change the color balance, or use the lasso tool to nip a few pixels out of a 19th century editorial cartoon that I'm matting into a modern backdrop, I'm making a communicative decision. The goal isn't "perfection" or "photorealism." I'm not trying to spin around really quick in order to get a look at the stuff behind me in Plato's cave. I am making communicative choices.

What's more: working with that lasso tool on a 10,000 pixel-wide Library of Congress scan of a painting from the cover of Puck magazine or a 15,000 pixel wide scan of Hieronymus Bosch's Garden of Earthly Delights means that I'm touching the smallest individual contours of each brushstroke. This is quite a meditative experience – but it's also quite a communicative one. Tracing the smallest irregularities in a brushstroke definitely materializes a theory of mind for me, in which I can feel the artist reaching out across time to convey something to me via the tiny microdecisions I'm going over with my cursor.

Herein lies the problem with AI art. Just like with a law school letter of reference generated from three bullet points, the prompt given to an AI to produce creative writing or an image is the sum total of the communicative intent infused into the work. The prompter has a big, numinous, irreducible feeling and they want to infuse it into a work in order to materialize versions of that feeling in your mind and mine. When they deliver a single line's worth of description into the prompt box, then – by definition – that's the only part that carries any communicative freight. The AI has taken one sentence's worth of actual communication intended to convey the big, numinous, irreducible feeling and diluted it amongst a thousand brushstrokes or 10,000 words. I think this is what we mean when we say AI art is soul-less and sterile. Like the five paragraphs of nonsense generated from three bullet points from a law prof, the AI is padding out the part that makes this art – the microdecisions intended to convey the big, numinous, irreducible feeling – with a bunch of stuff that has no communicative intent and therefore can't be art.

If my thesis is right, then the more you work with the AI, the more art-like its output becomes. If the AI generates 50 variations from your prompt and you choose one, that's one more microdecision infused into the work. If you re-prompt and re-re-prompt the AI to generate refinements, then each of those prompts is a new payload of microdecisions that the AI can spread out across all the words or pixels, increasing the amount of communicative intent in each one.

Finally: not all art is verbose. Marcel Duchamp's "Fountain" – a urinal signed "R. Mutt" – has very few communicative choices. Duchamp chose the urinal, chose the paint, painted the signature, came up with a title (probably some other choices went into it, too). It's a significant work of art. I know because when I look at it I feel a big, numinous irreducible feeling that Duchamp infused in the work so that I could experience a facsimile of Duchamp's artistic impulse.

There are individual sentences, brushstrokes, single dance-steps that initiate the upload of the creator's numinous, irreducible feeling directly into my brain. It's possible that a single very good prompt could produce text or an image that had artistic meaning. But it's not likely, in just the same way that scribbling three words on a sheet of paper or painting a single brushstroke is unlikely to produce a meaningful work of art. Most art is somewhat verbose (but not all of it).

So there you have it: the reason I don't like AI art. It's not that AI artists lack for the big, numinous irreducible feelings. I firmly believe we all have those. The problem is that an AI prompt has very little communicative intent and nearly every good piece of art (though not every one) has more communicative intent than fits into an AI prompt.

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/25/communicative-intent/#diluted

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

It's a heist. Elon is the fraud. DOGE is the fraud. The coders destroying databases are the waste.

people are really fucking clueless about generative ai huh? you should absolutely not be using it for any sort of fact checking no matter how convenient. it does not operate in a way that guarantees factual information. its goal is not to deliver you the truth but deliver something coherent based on a given data set which may or may not include factual information. both the idolization of ai and fearmongering of it seem lost on what it is actually capable of doing

So within two days of each other, Fox News writes an article comparing aromanticism and asexuality to pedophilia, and then Matt Walsh releases a video saying asexuality is a mental illness and asexuals are tricking teenagers into having depression.

Not sure what’s going on right now over in Conservative World, but it’s a hell of a wild U-turn for them to suddenly switch from “Oh no! The left is sexualizing our children!” to “Oh no! The left is asexualizing our children!”
